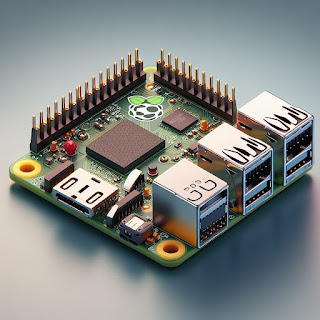

It has been a busy year, and one of the things I have played with the most has been a few of the new AI tools, like Bard, Dall-E, Copilot, etc. The picture on the left is, allegedly, a Raspberry Pi Pico W, but it is not even close. Still, it serves to illustrate my point: sometimes you get good or decent answers, and sometimes AI is just wrong. The problem is that we use computers to get reliable answers, and AI is being pushed as a great tool to speed up that process, which it sometimes does splendidly. Unfortunately, it cannot be trusted. Even so, during the second half of 2023 we watched AI being touted as a revolution, and it was, to a point, one of the reasons for the bull market that lifted many stocks. I guess that during 2024 we will keep exploring these tools, finding out which use cases make sense and where to avoid them. Meanwhile, I convinced one of the image-generating AIs to make me a suitable image to celebrate the new year (it was not trivial as th